Evolving dev = evolving risk

Unlike traditional software, which operates through deterministic, rule-based logic, AI-native apps rely on inherently non-deterministic workflows driven by large language models (LLMs). These “black box” systems, the “brain” of AI agents, introduce unpredictable behaviors that change how we think about securing software. Legacy AppSec approaches, built around static rules and predictable patterns, can’t handle the dynamic nature of AI. Instead of relying on a rigid castle-and-moat defense, modern security strategies need to be adaptive: continuously monitoring AI behavior for anomalies and emphasizing upstream controls through better threat modeling and deeper testing.

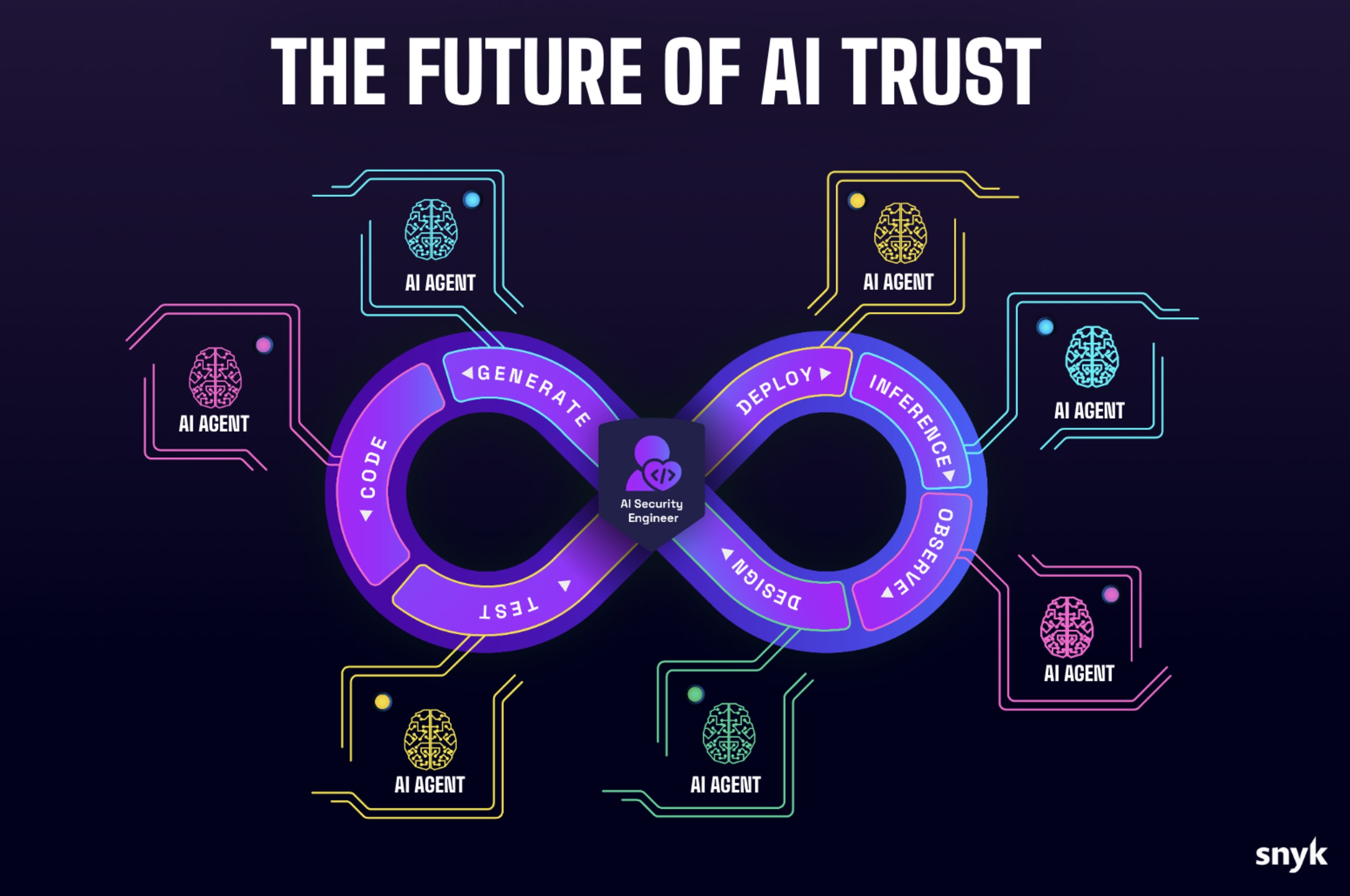

Securing AI-native apps requires coverage across the full AI development lifecycle, not just at the extreme left (before code generation) or the extreme right (near runtime). Security must be continuous throughout. For example, before code is generated, spec-based and vibe coding call for early threat modeling and memory guardrails that filter out risky prompts. At the point of code and model generation, security must address risks such as data poisoning and package hallucination, for instance with AI Security Posture Management (AI-SPM). And to the right, near runtime, LLM and agent red teaming needs to address threats like prompt injection and data exfiltration. Since risk can emerge at any stage, security must be embedded early and keep evolving alongside the AI itself.
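To make the package hallucination risk concrete, here is a minimal sketch of one possible control at the code generation stage: checking AI-suggested dependencies against an allowlist of packages a team has already vetted. It is an illustration only, not how AI-SPM or any Snyk product works. The allowlist contents and the names `VETTED_PACKAGES`, `normalize`, and `flag_unvetted` are assumptions made up for this example.

```python
import re

# Hypothetical allowlist of packages the organization has already vetted.
VETTED_PACKAGES = {"requests", "numpy", "flask"}


def normalize(name: str) -> str:
    """Lowercase the name and collapse runs of '-', '_' and '.' into '-'."""
    return re.sub(r"[-_.]+", "-", name).lower()


def flag_unvetted(requirement_lines, vetted=VETTED_PACKAGES):
    """Return package names from requirement lines that are not on the allowlist."""
    vetted_normalized = {normalize(p) for p in vetted}
    flagged = []
    for line in requirement_lines:
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        # Keep only the name, cutting at any version specifier, extra, or marker.
        name = re.split(r"[<>=!~\[; ]", line, maxsplit=1)[0]
        if normalize(name) not in vetted_normalized:
            flagged.append(name)
    return flagged


if __name__ == "__main__":
    suggested = ["requests==2.31.0", "Flask>=2.0", "reqeusts-toolbelt-pro"]
    print(flag_unvetted(suggested))
```

A flagged name is not necessarily malicious or hallucinated. It only means a human or a further automated check should look at it before it is installed.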

The Future of AI Trust: Introducing Snyk Labs

Snyk continues to stay at the forefront of application security, embedding AI-powered and agentic capabilities throughout its AI Trust Platform, so our customers can harness a new level of efficiency to develop fast and stay secure. Now, as we look to the future, we’ve founded Snyk Labs, a new research arm focused on advancing AI security. By researching new AI threats like those mentioned above, incubating new solutions for securing AI models and AI-native apps, and building coalitions, Snyk Labs is shaping the new frontier of AppSec, with security practices that are both adaptive and continuous.

One of the first Snyk Labs incubations focuses on AI-SPM, beginning with visibility into the AI models and AI agent components running throughout an environment. We are focused on helping organizations create an AI Bill of Materials (AI BoM) for a full view of their individual threat landscape. However, visibility is just the beginning. Just as Snyk combined security research and deep ML expertise to create the world’s leading open source vulnerability database, which is OEMed by some of the biggest companies in the world, Snyk Labs research is compiling the world’s first and most comprehensive AI Model Risk Registry.
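As a rough illustration of what an AI BoM could capture, the sketch below models an inventory of AI components as plain Python dataclasses and serializes it to JSON. This is not Snyk's format or any published BoM standard. The class names `AIComponent` and `AIBom`, the field names, and the sample entries are all assumptions chosen for the example.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class AIComponent:
    """One AI-related item found in an environment (hypothetical schema)."""
    name: str
    kind: str                 # e.g. "model", "dataset", "agent-tool"
    version: str = "unknown"
    source: str = "unknown"   # where the component was obtained


@dataclass
class AIBom:
    """A minimal inventory of the AI components used by one application."""
    application: str
    components: list = field(default_factory=list)

    def add(self, component: AIComponent) -> None:
        self.components.append(component)

    def by_kind(self, kind: str) -> list:
        """Return all components of the given kind."""
        return [c for c in self.components if c.kind == kind]

    def to_json(self) -> str:
        """Serialize the inventory, including nested components, to JSON."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)


if __name__ == "__main__":
    bom = AIBom(application="support-chatbot")
    bom.add(AIComponent(name="example-llm", kind="model", version="1.0"))
    bom.add(AIComponent(name="ticket-search", kind="agent-tool"))
    print(bom.to_json())
```

Even a simple inventory like this gives a team something to query, for example listing every model in use, before layering risk data on top.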

To further this research, Snyk has joined the Coalition for Secure AI (CoSAI) and is supporting OWASP on new LLM security standards, helping the community find new ways to model AI-native threats. As we look forward to delivering these and other innovations in the coming months, we invite you to engage with our latest research and demos or apply to be a hands-on design partner.

GenAI is delivering the future, and we’re here for it.

Snyk has always known that strategies for securing software must stay one step ahead: from shifting left to put application security in the hands of developers, to embedding security into DevSecOps practices, and now, to creating GenAI apps that are secure by default. Our commitment to customers has always been to enable a secure future of development, and Snyk Labs upholds that commitment by enabling the emerging role of AI Security Engineering. Together, we will execute on our vision of AI Trust, in which agents are embedded at every step, security has continuous visibility, AI-native threats are uncovered (and fixed) in real time, and code remains secure at the speed of AI. Through this, we’re helping customers secure their futures and embrace AI-native apps, not just to keep up, but to unlock entirely new business capabilities that were once out of reach.