This is fantastic for innovation, but it opens up a new, uncharted frontier for supply chain security.

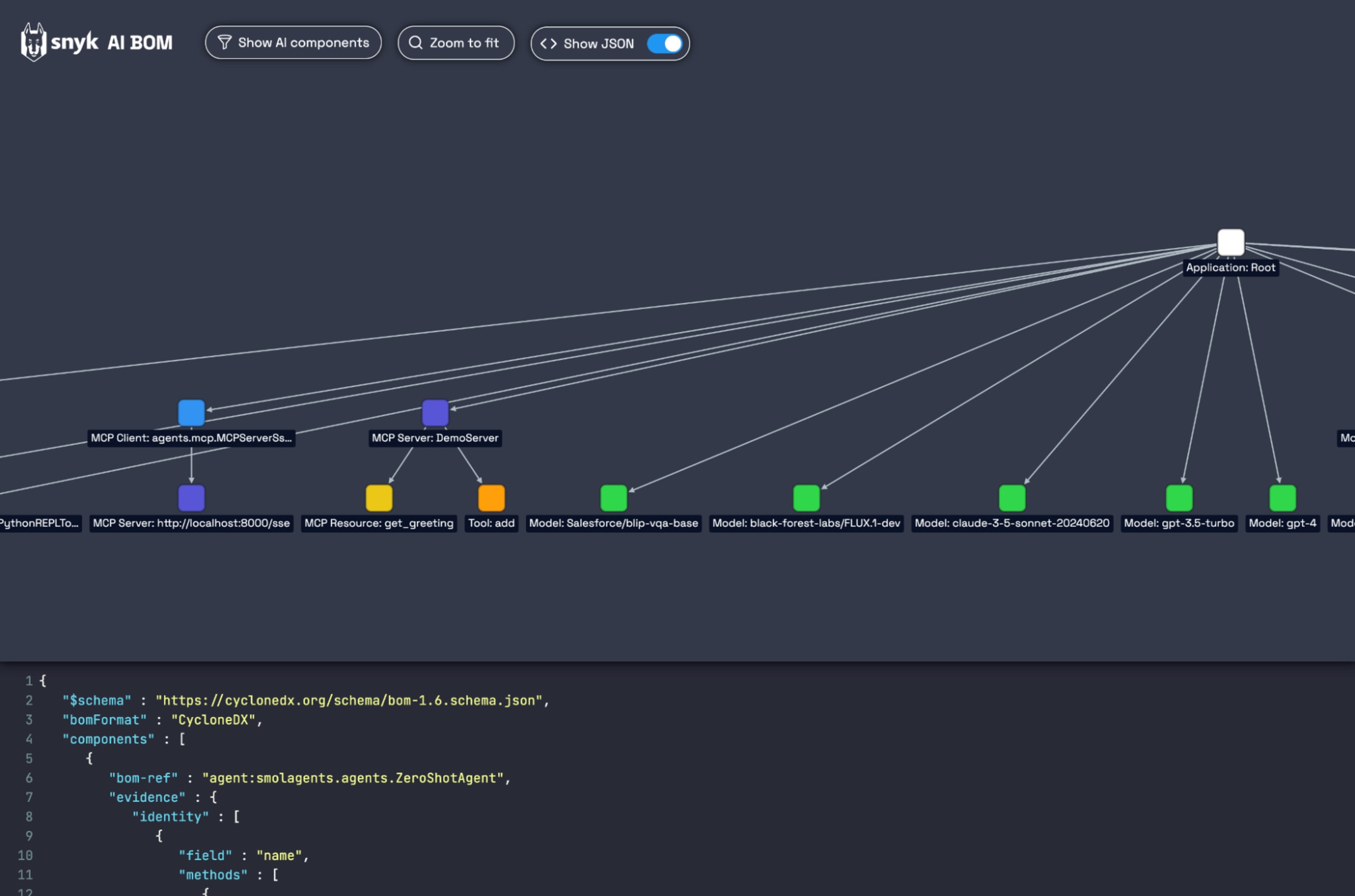

This is why we're excited to announce that we've integrated MCP detection directly into Snyk’s AI Bill of Materials (AI-BOM), giving you a new view into the services your AI applications actually depend on. You can get started with the documentation here!

Why we built this

At Snyk, we want you to develop AI fast, but we also want you to trust the AI you build. As developers adopt MCP to integrate everything from local scripts to remote services, a critical visibility gap has emerged. Every connection to an MCP server exposes your AI application to untrusted inputs and outputs.

But how can you trust what you can't see?

Without a clear picture of these connections, it's impossible to answer basic questions:

What third-party MCP servers is my agent talking to?

What tools and data sources is it accessing?

If a vulnerability is found in an MCP tool, how do I know if I'm affected?

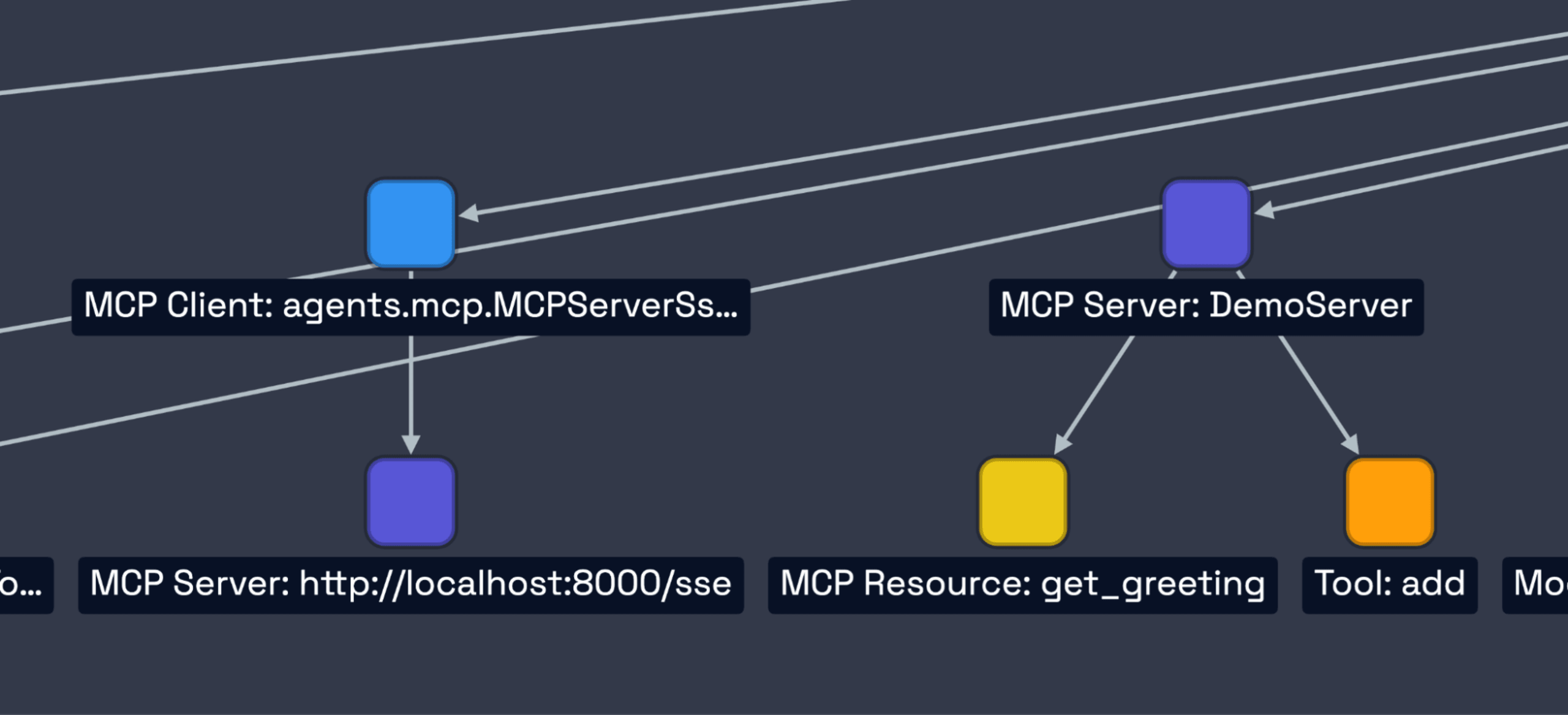

We built MCP support into our AI-BOM to answer these questions automatically, turning the implicit trust network of your AI into an explicit, machine-readable dependency graph.

Why this matters for developers

This isn't just about compliance; it's about control. When you connect to an MCP server, you're extending your application's attack surface. A compromised or malicious server could lead to:

Data Exfiltration: Hidden instructions in a tool's description could leak sensitive information.

Behavior Modification: A server could redefine its instructions after a certain number of runs, turning a benign tool into a malicious one.

Unauthorized Access: A tool could be designed to read local files it shouldn't, like SSH keys or configuration files.

By parsing your source code and mapping out the entire MCP chain—from client to server to the specific tools and resources being used—our AI-BOM gives you a manifest of your AI's dependencies. This allows you to secure your AI supply chain with the same rigor you apply to your application code.

MCP clients and servers 101

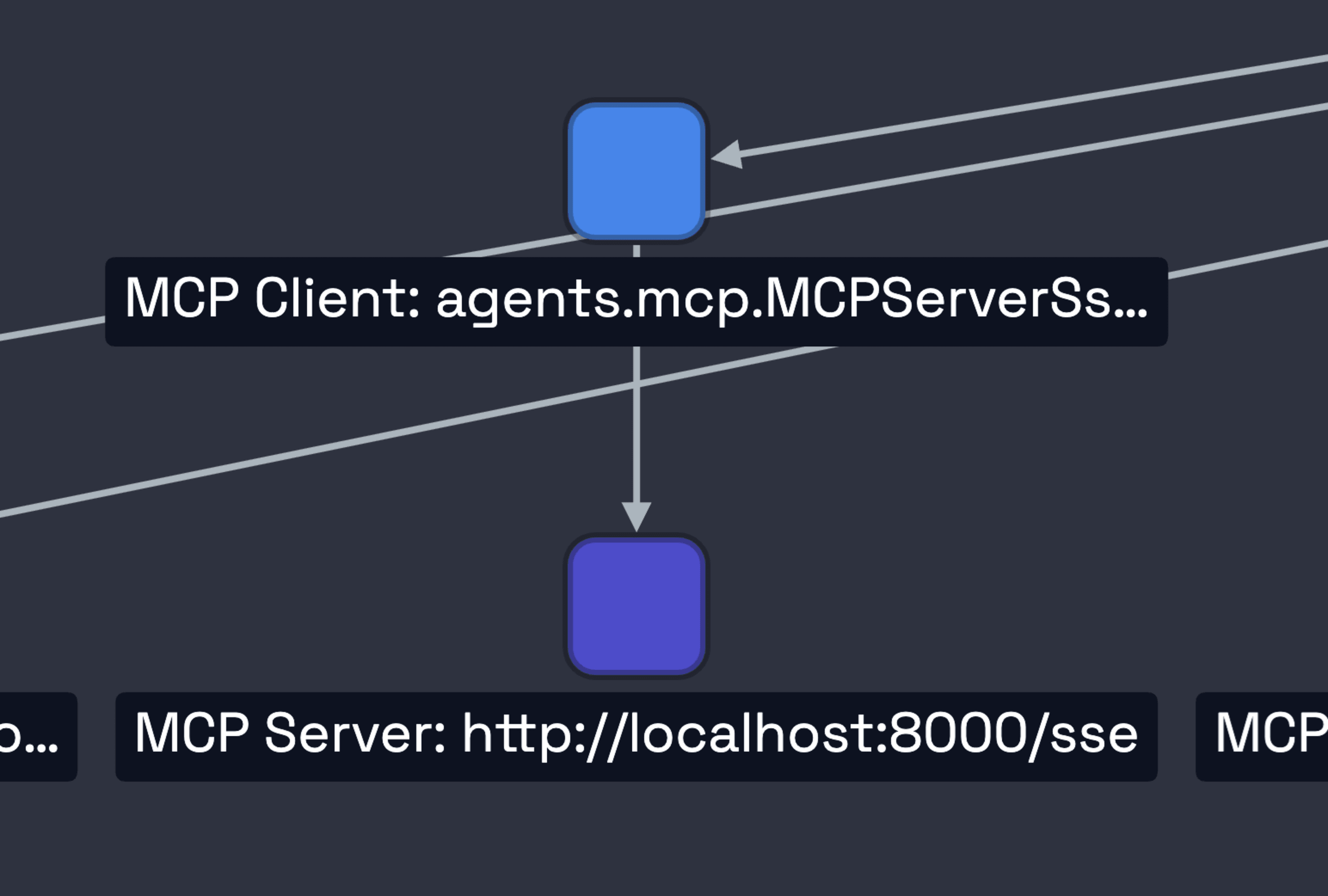

At its core, MCP follows a client-server architecture where a host application (like your IDE, a script, or Claude Desktop) uses a client to connect to one or more servers that provide tools, prompts, or other context.

A common pattern is using a local Python script as a server, communicating over standard I/O (stdio). Here’s a typical example of what the client-side code looks like:

```python
from mcp import ClientSession, StdioServerParameters, types
from mcp.client.stdio import stdio_client

# Define the local server to run
server_params = StdioServerParameters(
    command="python",
    args=["/path/to/your/math_server.py"],  # This script is now a dependency
)


async def run():
    # The client connects to the server process
    async with stdio_client(server_params) as (read, write):
        async with ClientSession(read, write) as session:
            await session.initialize()

            # The client can now discover and call tools from the server
            tools = await session.list_tools()
            result = await session.call_tool("add", arguments={"x": 5, "y": 10})
```

In this simple case, math_server.py has become a critical part of your AI's supply chain. Our AI-BOM makes that connection visible.

Libraries we support

We scan your code to detect MCP usage across a growing number of popular libraries and patterns, including:

The standard mcp Python library (ClientSession, stdio_client, streamablehttp_client)

pydantic-ai

openai-agents

langchain-mcp-adapters

smolagents
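To give a feel for what source-level detection can look like, here is a deliberately simplified sketch that flags imports of the libraries listed above using Python's built-in ast module. It is a toy illustration, not how Snyk's scanner works: the function name `find_mcp_imports` and the module-to-package mapping are our assumptions for this example, and a real scanner has to look much further than import statements.

```python
import ast

# Assumed mapping from top-level import name to package name.
# This is illustrative only and is not Snyk's actual rule set.
MCP_RELATED_MODULES = {
    "mcp": "mcp",
    "pydantic_ai": "pydantic-ai",
    "agents": "openai-agents",
    "langchain_mcp_adapters": "langchain-mcp-adapters",
    "smolagents": "smolagents",
}


def find_mcp_imports(source: str) -> set[str]:
    """Return the package names whose top-level module is imported in `source`."""
    found = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            modules = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # Absolute "from x import y" only; relative imports are skipped.
            modules = [node.module]
        else:
            continue
        for module in modules:
            top_level = module.split(".")[0]
            if top_level in MCP_RELATED_MODULES:
                found.add(MCP_RELATED_MODULES[top_level])
    return found


sample = """
from mcp import ClientSession, StdioServerParameters
from mcp.client.stdio import stdio_client
import json
"""
print(sorted(find_mcp_imports(sample)))
```

An import alone only tells you a library is present. Working out which server a client actually connects to, and which tools it calls, requires following the code further.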

How to read the output

When we detect MCP components, we add them to your CycloneDX AI-BOM, creating a clear dependency graph.

Consider a scenario where your application uses a server named MathServer to access a tool called add. The generated AI-BOM would contain entries like this:

```json
{
  "components": [
    { "bom-ref": "application:Root", "name": "Root", "type": "application" },
    {
      "bom-ref": "mcp-client:...",
      "name": "mcp.ClientSession ..."
    },
    {
      "bom-ref": "mcp-server:MathServer",
      "name": "MathServer"
    },
    {
      "bom-ref": "tool:add",
      "name": "add"
    }
  ],
  "dependencies": [
    { "ref": "application:Root", "dependsOn": ["mcp-client:..."] },
    { "ref": "mcp-client:...", "dependsOn": ["mcp-server:MathServer"] },
    { "ref": "mcp-server:MathServer", "dependsOn": ["tool:add"] }
  ]
}
```

The dependencies section clearly shows the chain:

Your Root application depends on the mcp-client.

The mcp-client depends on the mcp-server:MathServer.

The mcp-server:MathServer in turn depends on (provides) tool:add.
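If you want to reproduce that chain yourself, a few lines of Python are enough. The sketch below is a minimal example that assumes the BOM has the same shape as the sample above: the `dependencies` array is copied from it, with the elided "..." refs kept verbatim, and `dependency_chains` is a helper name we made up for illustration.

```python
import json

# "dependencies" section copied from the example AI-BOM; the "..." refs are
# elided in the original and are kept as-is here.
AI_BOM = json.loads("""
{
  "dependencies": [
    { "ref": "application:Root", "dependsOn": ["mcp-client:..."] },
    { "ref": "mcp-client:...", "dependsOn": ["mcp-server:MathServer"] },
    { "ref": "mcp-server:MathServer", "dependsOn": ["tool:add"] }
  ]
}
""")


def dependency_chains(bom: dict, start: str) -> list[list[str]]:
    """Return every path from `start` down to a ref with no further dependencies."""
    edges = {d["ref"]: d.get("dependsOn", []) for d in bom.get("dependencies", [])}

    def walk(ref: str, path: list[str]) -> list[list[str]]:
        path = path + [ref]
        # Ignore refs already on the current path so a cyclic BOM cannot loop forever.
        children = [c for c in edges.get(ref, []) if c not in path]
        if not children:
            return [path]
        chains = []
        for child in children:
            chains.extend(walk(child, path))
        return chains

    return walk(start, [])


for chain in dependency_chains(AI_BOM, "application:Root"):
    print(" -> ".join(chain))
# prints: application:Root -> mcp-client:... -> mcp-server:MathServer -> tool:add
```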

This structured output gives you a powerful, queryable inventory of your AI's operational landscape.
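As one example of such a query, the sketch below answers the earlier question, "If a vulnerability is found in an MCP tool, how do I know if I'm affected?", by walking the dependency edges in reverse. It assumes the `dependencies` array from the sample above, written out as Python data; `affected_by` is a hypothetical helper name, not part of any Snyk tooling.

```python
from collections import deque

# The "dependencies" array from the example AI-BOM, as Python data.
DEPENDENCIES = [
    {"ref": "application:Root", "dependsOn": ["mcp-client:..."]},
    {"ref": "mcp-client:...", "dependsOn": ["mcp-server:MathServer"]},
    {"ref": "mcp-server:MathServer", "dependsOn": ["tool:add"]},
]


def affected_by(dependencies: list[dict], target: str) -> list[str]:
    """Return every ref that directly or transitively depends on `target`."""
    # Invert the edges: child ref -> refs that depend on it.
    dependents: dict[str, list[str]] = {}
    for entry in dependencies:
        for child in entry.get("dependsOn", []):
            dependents.setdefault(child, []).append(entry["ref"])

    # Breadth-first walk upward from the target, nearest dependents first.
    seen: list[str] = []
    queue = deque([target])
    while queue:
        current = queue.popleft()
        for parent in dependents.get(current, []):
            if parent not in seen:
                seen.append(parent)
                queue.append(parent)
    return seen


print(affected_by(DEPENDENCIES, "tool:add"))
# prints: ['mcp-server:MathServer', 'mcp-client:...', 'application:Root']
```

Because the walk is breadth-first, the result lists the closest dependents first, which makes it easy to see which server exposes the affected tool before tracing it back to the application.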

We're just getting started, and we're excited to work with the community to build a more secure AI ecosystem. Scan your projects today and see your full AI supply chain. We welcome your feedback, and you can get started with the documentation here!