From Hello World to high risk: the AI agents are live

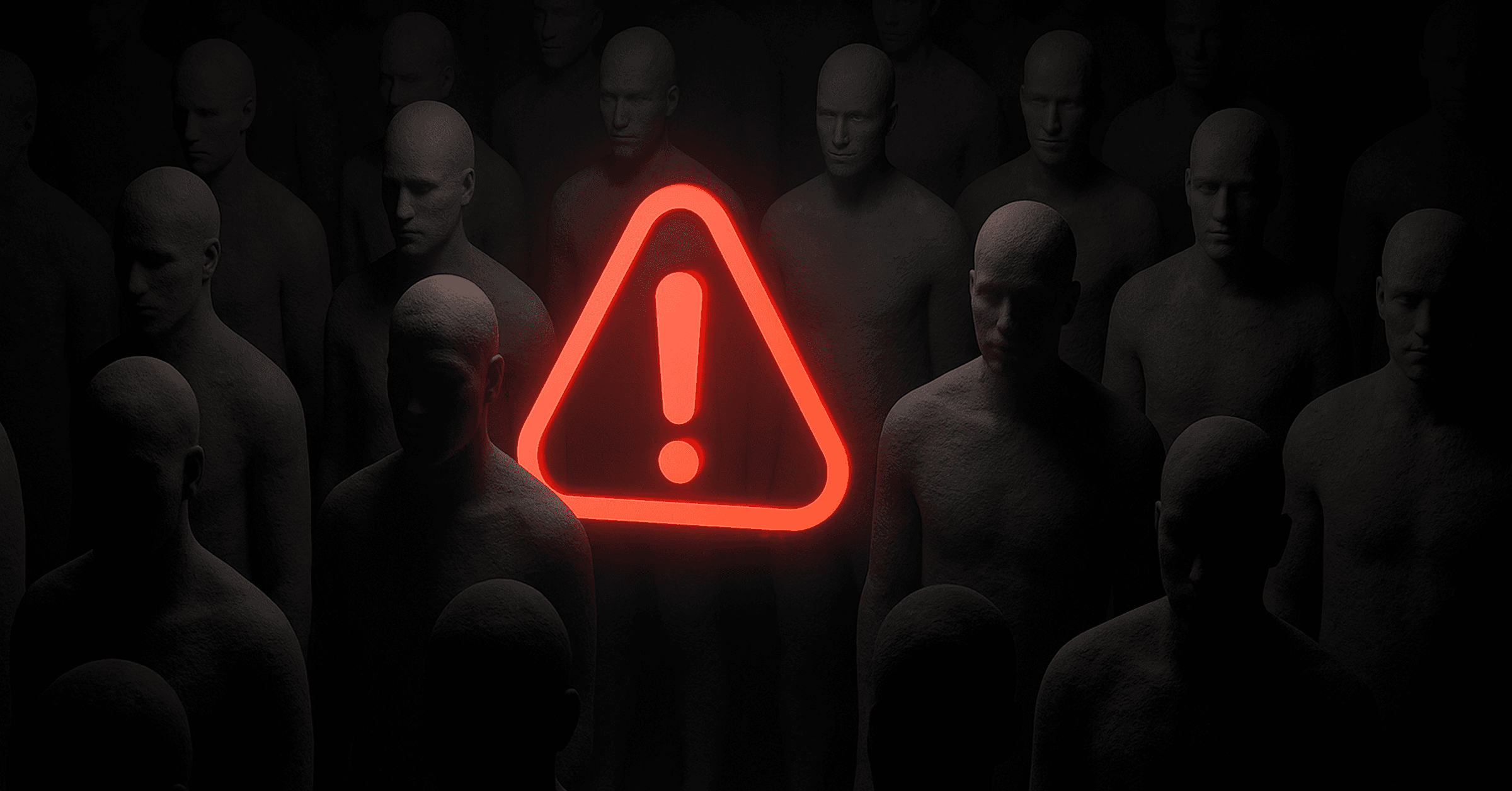

This isn’t about bugs in code. It’s about bugs in conversation. The moment agents started responding to natural language, they inherited every risk that comes with it. Think of prompt injections hidden in user inputs. Malicious payloads embedded in seemingly harmless docs or API responses. Adversarial prompts crafted to siphon sensitive data. These aren’t hypotheticals. They’re already being exploited. And most of your tools? They don’t speak the language to stop it.

That’s why Snyk has created a prototype red teaming engine for the LLM layer. It’s dev-first, security-savvy, and unapologetically focused. You define the threat — say, data leakage or unauthorized code execution. This tool launches targeted, real-world simulations to see if your agent takes the bait.

It’s the rigorous testing LLM systems need, with the developer speed they demand.

Snyk Labs Early Prototype: LLM Red Teaming

Security can’t just scan code anymore — it has to read between the lines

Traditional software security? That playbook’s well-worn — static analysis, firewalls, runtime protection. With LLM agents, the rules change.

These agents don’t just follow code — they follow language. And that means new rules, new risks. A single prompt can trigger a database query, summarize sensitive content, or call an API, not because it was explicitly programmed to, but because the user asked for it. That makes behavior flexible and exploitable.

Traditional AppSec tools can’t track these interactions. They can’t interpret prompts, model agent memory, or understand how a clever sentence can lead to prompt injection, tool misuse, or data leakage.

Securing LLM agents means thinking like an adversary, in their language. Red teaming must simulate real prompts, model full conversations, and test how agents behave in context. It’s a language-native challenge, and that’s exactly what this red teaming tool is built to handle.

To meet the security challenges of LLM agents, Snyk created a red teaming prototype built specifically for this new frontier. It’s not a repurposed content filter or generic scanner. It’s a purpose-built solution that makes it practical for developers and security engineers to test agents for real vulnerabilities during development. The goal is to bring red teaming into development, right where it belongs. Not just another filter — this is offensive AI security.

Dev-ready, security-smart: built for the people in the trenches

Snyk’s LLM red teaming prototype is designed for developers and security teams, bridging the gap between rapid AI development and practical security testing.

For developers, it eliminates the need to manually craft adversarial prompts, not to mention ensuring they’ve covered all the different types of attacks. Instead, they get fast, targeted results, ideal for catching risky prompt changes as they happen and before they ship. It fits directly into development workflows, offering immediate feedback without slowing velocity.

For security engineers, it provides consistent, repeatable tests across multiple agents. It surfaces issues early and delivers clear, reproducible evidence when prompt-based leaks occur, enabling faster remediation and easier team communication.

The LLM red teaming prototype turns LLM security from a bottleneck and into part of the everyday workflow by supporting dev agility and security assurance.

What’s next: into the next wave of AI exploits

The threat landscape is evolving fast, and so is Snyk Labs. This prototype will soon become an incubation available to try and provide feedback on. It’s designed to meet the next generation of AI-driven exploits head-on and provide value to devs as well as security teams from day one.

As attackers find new ways to manipulate agent behavior, security teams need tools that evolve just as quickly. Snyk’s LLM red teaming prototype was built for adaptability and to stay aligned with how agents are built, deployed, and compromised across diverse environments.

Know how your agent responds — before someone else figures it out for you.

Explore the rest of Snyk Labs to see more AI security demos, sign up for early access to our latest incubations, and be a part of shaping the future of security.