This article was created in partnership with Snyk as a guest blog post.

AI Security Posture Management (AI-SPM) emerges as a framework for continuously monitoring, assessing, and securing AI-native assets throughout their lifecycle.

This article examines AI-SPM's role in securing training data, models, inference endpoints, and AI pipelines at scale, offering leadership insights into this critical, emerging discipline.

Introduction: The imperative for AI-native security posture management

As organizations increasingly rely on AI-powered applications, AI Agents, and machine learning pipelines, the need for specialized security posture management has become paramount. AI Security Posture Management (AI-SPM) represents a paradigm shift in how organizations approach the security of AI-native assets, extending far beyond conventional infrastructure and application security models.

AI-SPM encompasses a comprehensive framework for the continuous monitoring, assessment, and enforcement of security policies across all AI-related assets within an organization's technology stack. Unlike traditional Cloud Security Posture Management (CSPM) or Data Security Posture Management (DSPM) solutions, AI-SPM addresses the unique security challenges inherent to AI systems: model integrity, training data poisoning, inference endpoint vulnerabilities, and the complex interdependencies between AI components and traditional software.

The distinction between AI-SPM and conventional security posture management becomes evident when examining the nature of AI assets. Traditional security frameworks operate on a relatively static infrastructure. In contrast, AI systems are inherently dynamic, with models that evolve through training, data pipelines that continuously process information, and inference endpoints that generate novel outputs. This dynamic nature requires security approaches that can adapt to the fluid characteristics of AI workloads while maintaining comprehensive visibility and control.

Recent industry developments underscore the critical importance of embedding security directly into AI development workflows. AI security cannot be retrofitted as an afterthought but must be architected from the ground up. The integration of AI/ML lifecycles into traditional software development pipelines has created hybrid environments where conventional DevSecOps practices must evolve to accommodate AI-specific security requirements. AI-SPM serves as the bridge between traditional application security and the emerging field of MLSecOps, providing organizations with the visibility, governance, and enforcement capabilities necessary to secure AI assets throughout their entire lifecycle.

Figure 1 presents AI-SPM as a unified framework organized around the four main sections of comprehensive AI security coverage. Each pillar directly corresponds to a critical capability area: Visibility & Asset Inventory establishes the foundation through automated discovery of all AI components across distributed environments; Configuration & Vulnerability Detection provides continuous assessment for AI-specific threats like model poisoning and agent-based attacks; Policy & Access Governance implements sophisticated controls that address the unique permission and compliance challenges of AI systems; and DevSecOps & MLSecOps Integration ensures security becomes an automated, embedded part of AI development workflows rather than an external checkpoint.

The central hub emphasizes that these capabilities must work together as an interconnected system—effective AI security cannot be achieved through isolated point solutions but requires coordinated orchestration across all four domains to address the dynamic, complex nature of modern AI environments. The following sections will dive deep into each of these pillars, exploring the specific technologies, methodologies, and implementation strategies that organizations need to build a comprehensive AI-SPM capability.

Section 1: Visibility & Asset Inventory for AI Systems

The foundation of effective AI-SPM rests upon comprehensive visibility into all AI-related assets within an organization's ecosystem. This challenge is magnified by the distributed nature of modern AI deployments, where models, datasets, and APIs may span multiple cloud providers, on-premises infrastructure, and edge environments.

Automated discovery of AI assets

Modern AI-SPM platforms must employ sophisticated discovery mechanisms to identify and catalog the full spectrum of AI components, including machine learning models, training datasets, inference endpoints, and the complex web of their dependencies. This is compounded by the phenomenon of "shadow AI," where teams deploy AI tools and models without proper governance. Unlike traditional shadow IT, shadow AI can emerge through simple API integrations or model downloads from public repositories like Hugging Face. This pervasiveness makes automated discovery essential for maintaining comprehensive visibility.

Model provenance and lineage tracking

Maintaining comprehensive model provenance is critical to AI security. A model's provenance encompasses security-relevant information, including the source and integrity of its training data, the environment used for training, and the complete chain of transformations, including fine-tuning. Effective provenance tracking requires integration with the entire AI development lifecycle to capture technical metadata and governance information. This lineage enables organizations to understand the complete dependency graph of their AI assets, which is crucial for impact assessment, compliance reporting, and incident response.

AI Bill of Materials (AI-BOM) implementation

Drawing inspiration from the Software Bill of Materials (SBOM), the AI Bill of Materials (AI-BOM) represents a vital approach to AI asset transparency. An AI-BOM should provide a comprehensive inventory of all AI components, extending beyond a simple list to include critical security and governance metadata. This includes model identification, dependency relationships, security assessment results, compliance status, and licensing information. This foundational capability enables organizations to implement comprehensive governance frameworks while maintaining the agility required for innovation.

Integration with development workflows

The effectiveness of AI asset inventory systems depends heavily on their integration with existing development workflows. AI-SPM platforms must provide seamless integration with diverse environments like Jupyter notebooks and cloud-based ML platforms, minimizing friction for developers. This is exemplified by the emergence of AI-powered coding assistants, which generate code at unprecedented speeds and can potentially introduce vulnerabilities just as quickly. Embedding security context directly into the AI development workflow enables real-time security assessment and remediation guidance within the development environment itself.

Section 2: Configuration, Vulnerability & Misuse Detection

Detecting misconfigurations, vulnerabilities, and misuse within AI systems is a complex challenge. AI-specific security problems can emerge from subtle interactions between models, data, and operational environments, requiring sophisticated detection mechanisms.

Misconfiguration detection in AI infrastructure

AI system misconfigurations present unique security risks. The most critical often involve inference endpoints inadvertently exposed to public networks without proper authentication, potentially leading to unauthorized data extraction, model abuse, or denial-of-service attacks. AI-SPM platforms must continuously monitor for common misconfiguration patterns, including APIs deployed without authentication, model-serving endpoints with excessive network exposure, and data pipelines with overly permissive access to sensitive datasets.

Model poisoning and drift detection

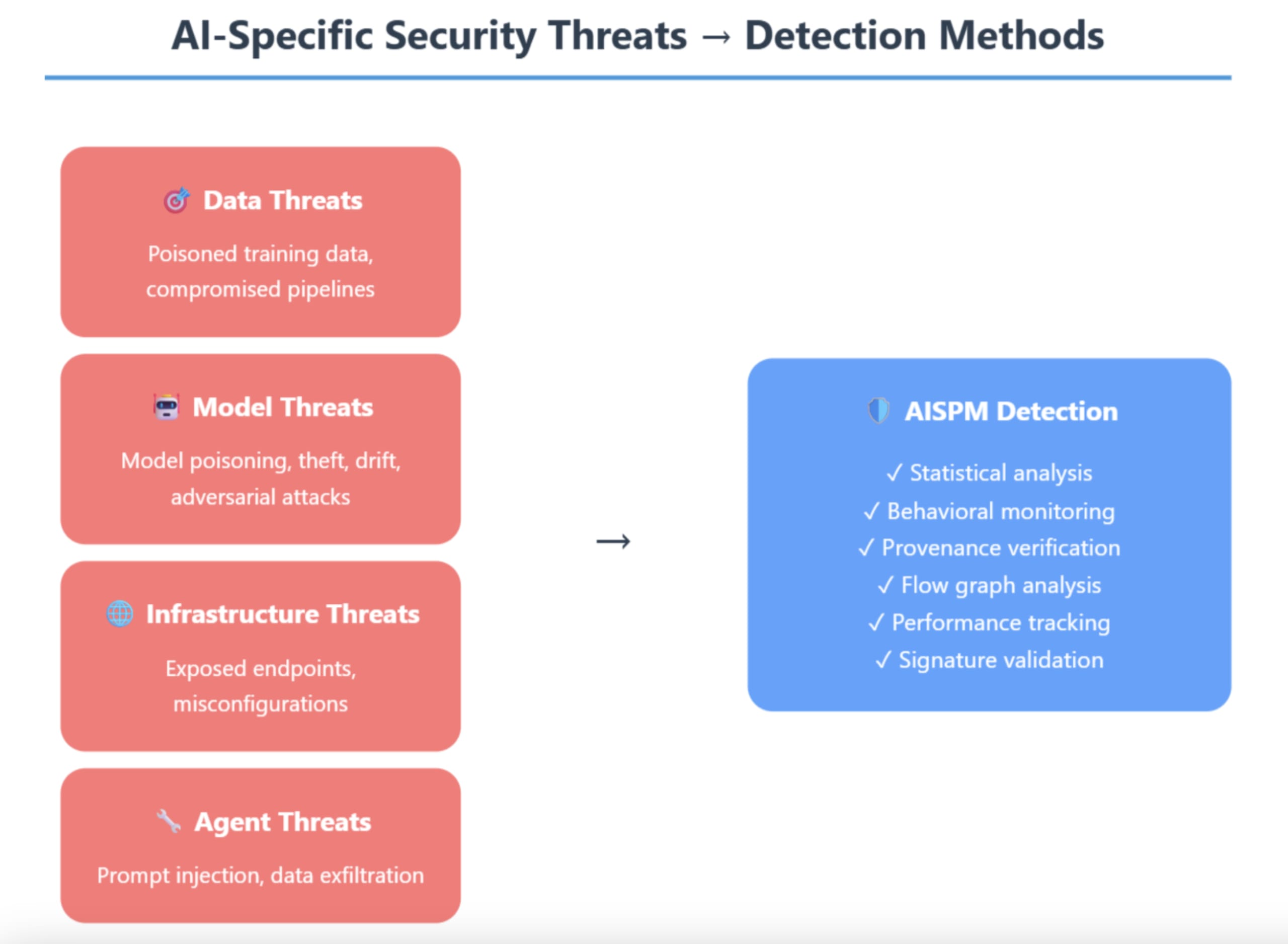

Model poisoning is an insidious attack where adversaries manipulate training data to introduce malicious behavior. Traditional security scanning is inadequate for detecting this, as the malicious behavior is embedded within the model's learned parameters. AI-SPM platforms must implement sophisticated model analysis, including statistical analysis of outputs, adversarial testing, and provenance verification.

Model drift, where models lose effectiveness over time, can also create security vulnerabilities. For example, a fraud detection model that experiences drift might fail to identify new attack patterns. Detecting drift requires continuous monitoring of performance metrics, input data distributions, and output patterns.

Figure 2 shows a high-level mapping of detection capabilities for some AI threats. Categorizing threats into data, model, infrastructure, and agent-based attacks demonstrates why traditional security tools are insufficient for AI environments. The arrow flow to AI-SPM detection methods shows how the framework provides targeted solutions like statistical analysis for model poisoning, behavioral monitoring for agent threats, and provenance verification for supply chain attacks. This visualization helps security professionals understand both the scope of AI-specific risks and the comprehensive detection capabilities needed to mitigate them.

Agent-based exfiltration and hidden attacks

The emergence of Agentic AI systems has introduced new categories of security threats. AI agents can potentially exfiltrate sensitive information through subtle manipulations of their normal operational behavior. A pioneering approach to detecting these patterns involves modeling the complete flow graph of agent systems and their tool interactions to identify potentially dangerous sequences. This analysis goes beyond examining individual actions to understand the cumulative security impact of agent behavior. A "lethal trifecta" scenario can emerge when an agent has access to untrusted inputs, sensitive data, and external tools, which an attacker could manipulate to exfiltrate information or perform unauthorized actions.

Artifact integrity and supply chain security

The AI ecosystem relies heavily on pre-trained models and datasets from external repositories, creating significant supply chain security risks. AI-SPM platforms must implement comprehensive artifact integrity verification, including cryptographic signature validation, vulnerability scanning of model dependencies, and behavioral analysis of pre-trained models to identify potential malicious functionality.

OWASP Top 10 for LLMs integration

The Open Web Application Security Project (OWASP) has identified ten critical security risks for Large Language Model applications. An effective AI-SPM strategy must incorporate detection capabilities for each of these risk categories, from Prompt Injection and Training Data Poisoning to Insecure Plugin Design and Model Theft.

Section 3: Policy, access governance & remediation

The governance of AI systems requires sophisticated policy frameworks that extend beyond traditional access control models. Effective AI governance must balance security and compliance with the need for innovation, creating policies that adapt to the evolving nature of AI.

Model permissions and access control frameworks

Traditional access control models are inadequate for governing AI systems. AI-specific access control requires considering multiple dimensions, including model capabilities, data sensitivity, user roles, and operational context. Modern AI-SPM platforms often implement attribute-based access control (ABAC) models designed for AI assets, which can evaluate access requests based on dynamic attributes. This allows for granular control, such as granting a data scientist access to a model for research during business hours but not permission to deploy it to production.

Data access controls and privacy protection

The intimate relationship between AI models and their training data creates complex governance challenges. AI systems can potentially extract and recombine information from training data in unexpected ways. AI-SPM platforms must implement data governance controls that can track data lineage, enforce privacy protection requirements like anonymization, and monitor for potential privacy leakage through model outputs.

API entitlements and service governance

The proliferation of AI services exposed through APIs creates new governance challenges. API governance for AI services must address rate limiting, content filtering, usage monitoring, and cost management. AI-SPM platforms must provide flexible API governance capabilities that can adapt to the diverse ways organizations expose and consume AI services.

DevSecOps pipeline integration and automated enforcement

Integrating AI security controls into existing DevSecOps pipelines requires extending traditional security approaches to accommodate model training, data pipeline assessment, and inference endpoint testing. Embedding security testing directly into AI development workflows makes security assessment an integral part of the development process rather than an external checkpoint. This integration enables real-time security feedback, automated remediation suggestions, and continuous validation.

Section 4: Integration with DevSecOps & MLSecOps

The convergence of traditional DevSecOps with the emerging discipline of MLSecOps is a significant challenge. This integration requires reconciling the rapid, experimental nature of AI development with the rigorous security controls of enterprise software development.

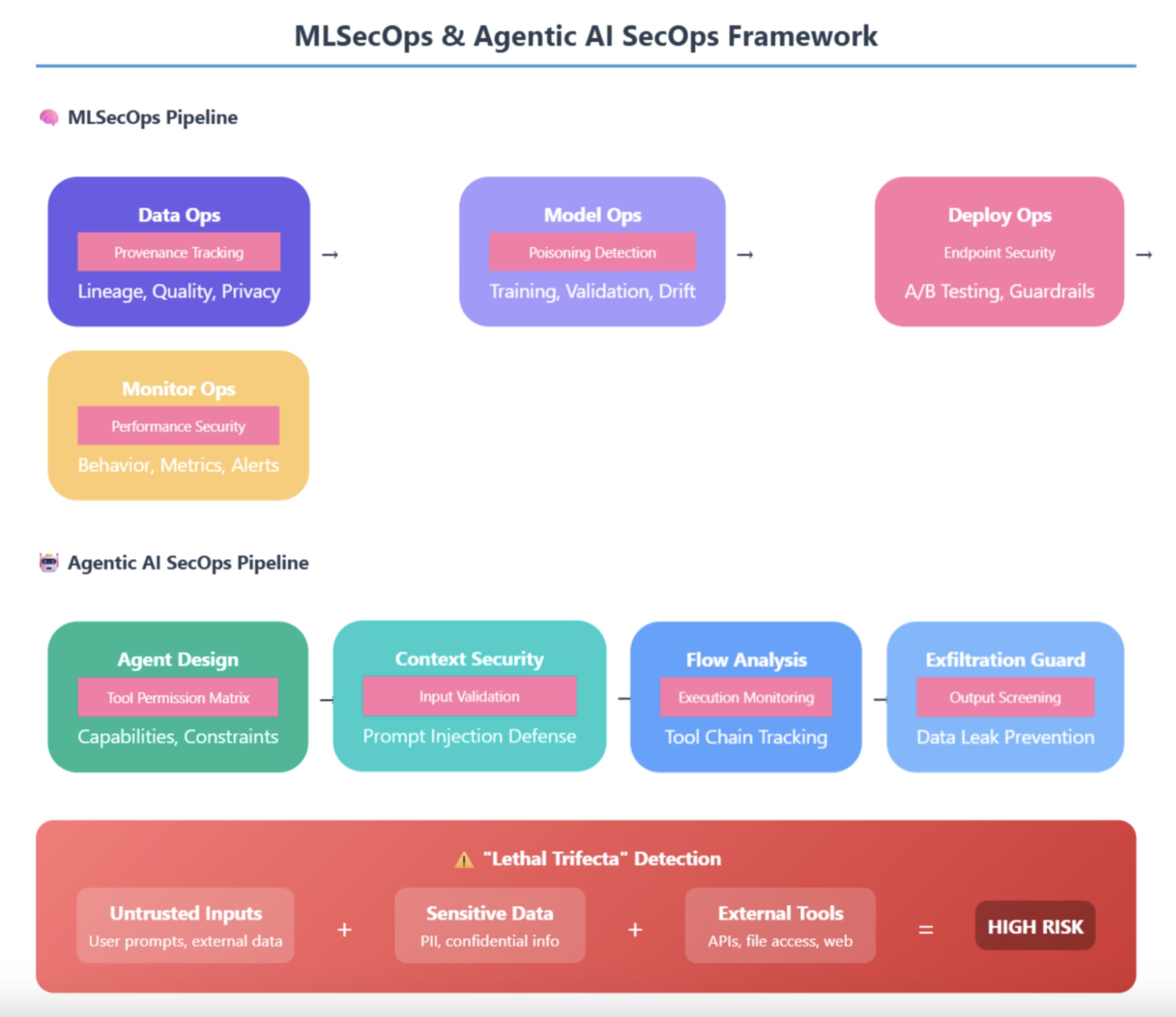

CI/CD integration architecture

Integrating AI security into CI/CD pipelines requires a new architectural approach. AI pipelines must accommodate dynamic elements like lengthy model training processes, data validation workflows, and complex deployment strategies like A/B testing. An effective integration provides specialized pipeline stages for AI security assessment, including model vulnerability scanning, data pipeline security validation, and compliance verification.

Figure 3 showcases the evolution from traditional DevSecOps to specialized MLSecOps and the emerging field of Agentic AI SecOps. The upper pipeline demonstrates ML-specific security operations, including data provenance tracking, model poisoning detection, secure deployment with A/B testing guardrails, and performance-based security monitoring. The lower pipeline introduces Agentic AI SecOps—a cutting-edge discipline that addresses the unique risks of autonomous AI agents through tool permission matrices, prompt injection defenses, execution flow monitoring, and output screening for data exfiltration. The highlighted "Lethal Trifecta" section visually represents the article's key insight about the dangerous convergence of untrusted inputs, sensitive data access, and external tool capabilities that can enable sophisticated agent-based attacks. This diagram positions your organization's thinking at the forefront of AI security, moving beyond basic MLOps to address the complex security challenges of next-generation agentic systems.

Guardrail enforcement at build and deployment stages

Implementing security guardrails within AI development pipelines requires a careful balance between enforcement and velocity. Build-stage guardrails should include model architecture validation, training data security assessment, and dependency security scanning. Deployment-stage guardrails focus on operational concerns, including inference endpoint security configuration, access control verification, and monitoring configuration.

Static and dynamic analysis integration

Security assessment of AI systems requires both static analysis of model artifacts and dynamic analysis of system behavior. Static analysis extends beyond code to include model architecture and training data. Dynamic analysis involves adversarial testing, performance testing under security constraints, and behavioral analysis to identify anomalies. Integrating these requires careful orchestration to ensure comprehensive coverage.

Continuous feedback loops and adaptive security

The dynamic nature of AI systems requires security approaches that can adapt through continuous feedback. This involves monitoring model performance, input and output patterns, and resource usage. These feedback mechanisms must be sophisticated enough to distinguish between legitimate changes in system behavior and potential security issues, allowing for adaptive learning that refines security assessment and response over time.

Section 5: Future outlook and emerging trends

AI Security Posture Management is rapidly evolving. Several trends will likely shape its future:

The increasing sophistication of AI agents and autonomous systems will require more advanced security assessment and monitoring capabilities.

The integration of AI security with broader enterprise security architectures will deepen, with AI-SPM platforms becoming integral components of security operations centers.

Regulatory requirements for AI security will continue to evolve, demanding more sophisticated compliance assessment and reporting from AI-SPM platforms.

The emergence of AI-powered security tools will create new opportunities for more sophisticated and automated AI security management.

As the industry matures, we can expect standardization of AI security assessment techniques and the evolution of AI security into a recognized professional discipline. The future of AI-SPM will be characterized by increasing automation, more sophisticated threat detection, and deeper integration with development workflows.

Organizations that invest early in comprehensive AI-SPM capabilities will be better positioned to securely leverage AI for a competitive advantage. The convergence of AI development and cybersecurity represents one of the most significant technological challenges of our time. Through comprehensive AI Security Posture Management, organizations can successfully navigate this challenge, securing their AI-native assets while unlocking the innovation that AI technologies enable.

Ready to see how a unified approach can secure your organization's AI assets from training to deployment? Download the white paper: What’s Lurking in Your AI? A Deep Dive into AI Security Posture Management (AISPM).